Installation & Setup

Installation

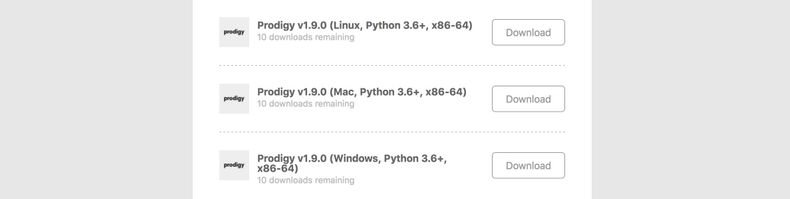

The download link lets you choose between Prodigy wheels for all supported platforms: macOS, Linux and Windows on Python 3.6+. You can download them all and keep them somewhere – they’re all yours! Whenever a new version of Prodigy is available, you’ll be able to download it using the same download link.

Wheel installers are basically pre-compiled Python package installers. You can install them like any other Python package by pointing pip install at the local path of the .whl file you downloaded. It’s recommended to use a new virtual environment for installing Prodigy.

pip install ./prodigy*.whl

The above command will install the Prodigy package in your current environment, create your Prodigy home directory and register the commands prodigy and pgy (a shortcut).

If the installation fails, double-check that you’ve downloaded the correct wheel and make sure your version of pip is up to date. If it still doesn’t work, check if the file name matches your platform (distutils.util.get_platform()) and rename the file if necessary. For more details, see this article on Python wheels.
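The platform check mentioned above can be done from Python. This is a minimal sketch, assuming that sysconfig.get_platform() reports the same kind of platform string as distutils.util.get_platform(); the wheel file name is hypothetical and only illustrates the naming scheme, in which the platform tag is the last dash-separated field.

```python
import sysconfig


def current_platform_tag() -> str:
    """Return this interpreter's platform string, normalized like a wheel tag."""
    platform = sysconfig.get_platform()
    # Wheel file names use underscores where the platform string has "-" or ".".
    return platform.replace("-", "_").replace(".", "_")


def wheel_platform_tag(wheel_filename: str) -> str:
    """Extract the platform tag, the last dash-separated field of the name."""
    stem = wheel_filename[: -len(".whl")]
    return stem.split("-")[-1]


# Hypothetical file name, used only to illustrate the naming scheme.
example = "prodigy-1.10.0-cp36.cp37.cp38-cp36m.cp37m.cp38m-linux_x86_64.whl"
print("wheel platform tag:  ", wheel_platform_tag(example))
print("current platform tag:", current_platform_tag())
```

The two values do not have to be identical for a wheel to be installable (for example, a wheel built for an older macOS version can work on a newer one), so treat a mismatch as a hint rather than a verdict.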

Essentially, Python wheels are only archives containing the source files – so you can also just unpack the wheel and place the contained prodigy package in your site-packages directory.

On installation, Prodigy will set up the alias commands prodigy and pgy. This should work out-of-the-box on most macOS and Linux setups. If not, you can always run the commands by prefixing them with python -m, for example: python -m prodigy stats. Alternatively, you can create your own alias and add it to your .bashrc to make it permanent:

alias prodigy="python -m prodigy"

On Windows you can also create a Windows activation script as a .ps1 file and run it in your environment, or add it to your PowerShell profile. See this thread for more details.

Function global:pgy { python -m prodigy $args}
Function global:prodigy { python -m prodigy $args}
Function global:spacy { python -m spacy $args}

By default, Prodigy starts the web server on localhost and port 8080. If you’re running Prodigy via a Docker container or a similar containerized environment, you’ll have to set the host to 0.0.0.0. Simply edit your prodigy.json and add the following:

{
  "host": "0.0.0.0"
}

See this thread for more details and background. The above approach should also work in other environments if you come across the following error on startup:

OSError: [Errno 99] Cannot assign requested address
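If you build container images automatically, the prodigy.json change described above can be scripted. The following is a minimal sketch using only the standard library; the helper name set_host is hypothetical and not part of Prodigy. It keeps any settings that are already in the file and only adds or updates "host". The demo runs against a temporary file rather than your real config.

```python
import json
import tempfile
from pathlib import Path


def set_host(config_path: Path, host: str = "0.0.0.0") -> dict:
    """Add or update the "host" setting, keeping all other settings."""
    config = {}
    if config_path.exists():
        config = json.loads(config_path.read_text(encoding="utf8"))
    config["host"] = host
    config_path.write_text(json.dumps(config, indent=2), encoding="utf8")
    return config


# Demo on a throwaway file that already contains one setting.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "prodigy.json"
    demo_path.write_text('{"port": 8080}', encoding="utf8")
    updated = set_host(demo_path)
    on_disk = json.loads(demo_path.read_text(encoding="utf8"))
print(updated)
```

For a real setup you would pass the path of your own prodigy.json, for example the one in your Prodigy home directory.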

Using and installing spaCy models

To use Prodigy’s built-in recipes for NER or text classification, you’ll also need to install a spaCy model – for example, the small English model, en_core_web_sm (around 34 MB). Note that Prodigy currently requires Python 3.6+ and the latest spaCy v2.2.

python -m spacy download en_core_web_sm

If you have trained your own spaCy model, you can load it into Prodigy using the path to the model directory. You can also use the spacy package command to turn it into a Python package, and install it in your current environment. All Prodigy recipes that allow a spacy_model argument can either take the name of an installed model package, or the path to a valid model package directory. Keep in mind that a new minor version of spaCy also means that you need to retrain your models. For example, models trained with spaCy v2.1 are not going to be compatible with v2.2.
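The retraining rule above comes down to comparing the major and minor parts of two version strings. Here is a small sketch of that comparison; the function name is hypothetical, and it only encodes the rule stated in the text, not spaCy's own compatibility checks.

```python
def same_minor_version(trained_with: str, installed: str) -> bool:
    """Compare only the major and minor components, e.g. "2.2" of "2.2.4"."""
    return trained_with.split(".")[:2] == installed.split(".")[:2]


# A model trained with v2.1 and a v2.2 runtime differ in the minor version.
needs_retraining = not same_minor_version("2.1.8", "2.2.0")
# Two v2.2 releases share the same minor version.
same_series = same_minor_version("2.2.0", "2.2.4")
print(needs_retraining, same_series)
```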

Using Prodigy with JupyterLab

If you’re using JupyterLab, you can install our jupyterlab-prodigy extension. It lets you execute recipe commands in notebook cells and opens the annotation UI in a JupyterLab tab so you don’t need to leave your notebook to annotate data.

jupyter labextension install jupyterlab-prodigy

Configuration

When you first run Prodigy, it will create a folder .prodigy in your home directory. By default, this will be the location where Prodigy looks for its configuration file, prodigy.json. You can change this directory via the environment variable PRODIGY_HOME:

PRODIGY_HOME=/custom/prodigy/home

When you run Prodigy, it will first check if a global configuration file exists. It will also check the current working directory for a prodigy.json or .prodigy.json. This allows you to override specific settings on a project-by-project basis. The following settings can be defined in your config file, or in the "config" returned by a recipe.

prodigy.json

{
  "theme": "basic",
  "custom_theme": {},
  "buttons": ["accept", "reject", "ignore", "undo"],
  "batch_size": 10,
  "history_size": 10,
  "port": 8080,
  "host": "localhost",
  "cors": true,
  "db": "sqlite",
  "db_settings": {},
  "api_keys": {},
  "validate": true,
  "auto_exclude_current": true,
  "instant_submit": false,
  "feed_overlap": true,
  "ui_lang": "en",
  "project_info": ["dataset", "session", "lang", "recipe_name", "view_id", "label"],
  "show_stats": false,
  "hide_meta": false,
  "show_flag": false,
  "instructions": false,
  "swipe": false,
  "split_sents_threshold": false,
  "html_template": false,
  "global_css": null,
  "javascript": null,
  "writing_dir": "ltr",
  "show_whitespace": false
}

| Setting | Description | Default |
|---|---|---|
| theme | Name of UI theme to use. | "basic" |
| custom_theme | Custom UI theme overrides, keyed by name. | {} |
| buttons | New: 1.10 Buttons to show at the bottom of the screen in order. If an answer button is disabled, the user will be unable to submit this answer and the keyboard shortcut will be disabled as well. Only the "undo" action will stay available via keyboard shortcut or click on the sidebar history. | ["accept", "reject", "ignore", "undo"] |
| batch_size | Number of tasks to return to and receive back from the web app at once. A low batch size means more frequent updates. | 10 |
| history_size | New: 1.10 Maximum number of examples to show in the sidebar history. Defaults to the value of batch_size. Note that the history size can’t be larger than the batch size. | 10 |
| port | Port to use for serving the web application. Can be overwritten by the PRODIGY_PORT environment variable. | 8080 |
| host | Host to use for serving the web application. Can be overwritten by the PRODIGY_HOST environment variable. | "localhost" |
| cors | Enable or disable cross-origin resource sharing (CORS) to allow the REST API to receive requests from other domains. | true |
| db | Name of database to use. | "sqlite" |
| db_settings | Additional settings for the respective databases. | {} |
| api_keys | Deprecated: Live API keys, keyed by API loader ID. | {} |
| validate | Validate incoming tasks and raise an error with more details if the format is incorrect. | true |
| force_stream_order | New: 1.9 Always send out tasks in the same order and re-send them until they’re answered, even if the app is refreshed in the browser. | false |
| auto_exclude_current | Automatically exclude examples already present in current dataset. | true |
| instant_submit | Instantly submit a task after it’s answered in the app, skipping the history and immediately triggering the update callback if available. | false |
| feed_overlap | Whether to send out each example once so it’s annotated by someone (false) or whether to send out each example to every session (true, default). Should be used with custom user sessions set via the app (via /?session=user_name). | true |
| ui_lang | New: 1.10 Language of descriptions, messages and tooltips in the UI. See here for available translations. | "en" |
| project_info | New: 1.10 Project info shown in sidebar, if available. The list of string IDs can be modified to hide or re-order the items. | ["dataset", "session", "lang", "recipe_name", "view_id", "label"] |
| show_stats | Show additional stats, like annotation decision counts, in the sidebar. | false |
| hide_meta | Hide the meta information displayed on annotation cards. | false |
| show_flag | Show a flag icon in the top right corner that lets you bookmark a task for later. Will add "flagged": true to the task. | false |
| instructions | Path to a text file with instructions for the annotator (HTML allowed). Will be displayed as a help modal in the UI. | false |
| swipe | Enable swipe gestures on touch devices (left for accept, right for reject). | false |
| split_sents_threshold | Minimum character length of a text to be split by the split_sentences preprocessor, mostly used in NER recipes. If false, all multi-sentence texts will be split. | false |
| html_template | Optional Mustache template for content in the html annotation interface. All task properties are available as variables. | false |
| global_css | CSS overrides added to the global scope. Takes a string value of the CSS code. | null |
| javascript | Custom JavaScript added to the global scope. Takes a string value of the JavaScript code. | null |
| writing_dir | Writing direction. Mostly important for manual text annotation interfaces. | "ltr" |
| show_whitespace | Always render whitespace characters as symbols in non-manual interfaces. | false |
| exclude_by | New: 1.9 Which hash to use ("task" or "input") to determine whether two examples are the same and an incoming example should be excluded. Typically used in recipes. | "task" |
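The lookup described at the start of this section (a global prodigy.json in the Prodigy home directory, plus an optional prodigy.json or .prodigy.json in the working directory) can be pictured as a key-by-key merge. The sketch below is not Prodigy's implementation. It assumes that project settings replace global ones per key, it applies .prodigy.json last if both project files exist (an arbitrary choice for illustration), and it leaves out recipe config and environment variables.

```python
import json
import tempfile
from pathlib import Path


def read_json(path: Path) -> dict:
    """Return the parsed file, or an empty dict if it does not exist."""
    return json.loads(path.read_text(encoding="utf8")) if path.exists() else {}


def load_config(prodigy_home: Path, working_dir: Path) -> dict:
    """Merge the global config with project-level overrides (assumed order)."""
    config = read_json(prodigy_home / "prodigy.json")
    for name in ("prodigy.json", ".prodigy.json"):
        config.update(read_json(working_dir / name))
    return config


# Demo with throwaway directories standing in for the home and project dirs.
with tempfile.TemporaryDirectory() as tmp:
    home, project = Path(tmp) / "home", Path(tmp) / "project"
    home.mkdir()
    project.mkdir()
    (home / "prodigy.json").write_text(
        json.dumps({"theme": "basic", "batch_size": 10}), encoding="utf8"
    )
    (project / "prodigy.json").write_text(
        json.dumps({"batch_size": 5}), encoding="utf8"
    )
    merged = load_config(home, project)
print(merged)
```

In this demo the project file only changes batch_size, so the theme from the global file is kept.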

Database setup

By default, Prodigy uses SQLite to store annotations in a simple database file in your Prodigy home directory. If you want to use the default database with its default settings, no further configuration is required and you can start using Prodigy straight away. Alternatively, you can choose to use Prodigy with a MySQL or PostgreSQL database, or write your own custom recipe to plug in any other storage solution. For more details, see the database API documentation.

prodigy.json

{
  "db": "sqlite",
  "db_settings": {
    "sqlite": {},
    "mysql": {},
    "postgresql": {}
  }
}

| Database | ID | Settings |
|---|---|---|
| SQLite | sqlite | name for database file name (defaults to "prodigy.db"), path for custom path (defaults to Prodigy home directory), plus sqlite3 connection parameters. To only store the database in memory, use ":memory:" as the database name. |
| MySQL | mysql | MySQLdb or PyMySQL connection parameters. |
| PostgreSQL | postgresql | psycopg2 connection parameters. |
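As an illustration of the SQLite settings in the table, the following sketch builds the "db" and "db_settings" part of a prodigy.json as a Python dict and prints it as JSON. The helper name, file name and directory are hypothetical; only the name and path keys and the ":memory:" value come from the table above.

```python
import json
from typing import Optional


def sqlite_config(name: str = "prodigy.db", path: Optional[str] = None) -> dict:
    """Build the database section of a config for the SQLite backend."""
    settings = {"name": name}
    if path is not None:
        # Without "path", the Prodigy home directory is used.
        settings["path"] = path
    return {"db": "sqlite", "db_settings": {"sqlite": settings}}


# Hypothetical file name and directory.
on_disk = sqlite_config(name="annotations.db", path="/custom/prodigy/data")
# Database that only lives in memory.
in_memory = sqlite_config(name=":memory:")
print(json.dumps(on_disk, indent=2))
```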

Environment variables

Prodigy lets you set the following environment variables:

| Variable | Description |
|---|---|
| PRODIGY_HOME | Use a custom path for the Prodigy home directory. Defaults to the equivalent of ~/.prodigy. |
| PRODIGY_LOGGING | Enable logging. Values can be either basic (simple logging) or verbose (more details per entry). |
| PRODIGY_PORT | Overwrite the port used to serve the Prodigy app and REST API. Supersedes the settings in the global, local and recipe-specific config. |
| PRODIGY_HOST | Overwrite the host used to serve the Prodigy app and REST API. Supersedes the settings in the global, local and recipe-specific config. |
| PRODIGY_ALLOWED_SESSIONS | Define comma-separated string names of multi-user session names that are allowed in the app. |
| PRODIGY_BASIC_AUTH_USER | Add super basic authentication to the app. String user name to accept. |
| PRODIGY_BASIC_AUTH_PASS | Add super basic authentication to the app. String password to accept. |
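To make the precedence concrete, here is a sketch of how PRODIGY_HOST and PRODIGY_PORT could supersede config values, and how the comma-separated PRODIGY_ALLOWED_SESSIONS value could be split. The function names are hypothetical and this is not Prodigy's own code. Stripping whitespace around session names is an assumption. The environment is passed in as a plain dict so the example does not depend on your shell.

```python
def resolve_server(config: dict, environ: dict) -> tuple:
    """Environment variables win over whatever the merged config says."""
    host = environ.get("PRODIGY_HOST", config.get("host", "localhost"))
    port = int(environ.get("PRODIGY_PORT", config.get("port", 8080)))
    return host, port


def allowed_sessions(environ: dict) -> list:
    """Split the comma-separated session names, ignoring empty entries."""
    raw = environ.get("PRODIGY_ALLOWED_SESSIONS", "")
    return [name.strip() for name in raw.split(",") if name.strip()]


config = {"host": "localhost", "port": 8080}
# Hypothetical environment: only the port and the sessions are set.
environ = {"PRODIGY_PORT": "9000", "PRODIGY_ALLOWED_SESSIONS": "alex,jo"}
server = resolve_server(config, environ)
sessions = allowed_sessions(environ)
print(server, sessions)
```

In a real script you would pass os.environ instead of the hand-written dict.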

Data validation

Wherever possible, Prodigy will try to validate the data passed around the application to make sure it has the correct format. This prevents confusing errors and problems later on – for example, if you use a string instead of a number for a config argument, or try to train a text classifier on an NER dataset by mistake. To disable validation you can set "validate": false in your prodigy.json, or "validate": False in the Python dictionary of your recipe config.

Example

✘ Invalid data for component 'ner'
spans   field required
{'text': ' Rats and Their Alarming Bugs', 'meta': {'source': 'The New York Times', 'score': 0.5056365132}, 'label': 'ENTERTAINMENT', '_input_hash': 1817790242, '_task_hash': -2039660589, 'answer': 'accept'}

| Validated data | Description |
|---|---|
| Stream | Validate each incoming task in the stream for the given annotation interface. |
| Prodigy config | New: 1.9 Validate the global, local and recipe config settings on startup. |
| Recipe | New: 1.9 Validate the dictionary of components returned by a recipe. |
| Training examples | New: 1.9 Validate training examples for the given component in train, train-curve and data-to-spacy. |
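The example error above reports that a text classification example was passed to the ner component, which expects a spans field. The following sketch shows the general idea of such a check. It is not Prodigy's validation code, and the REQUIRED_FIELDS mapping is a hypothetical stand-in for the real schemas. The task is a shortened copy of the one in the example.

```python
# Hypothetical mapping from component name to the fields it needs.
REQUIRED_FIELDS = {"ner": ("text", "spans")}


def find_missing_fields(task: dict, component: str) -> list:
    """Return the required fields that the task does not contain."""
    return [field for field in REQUIRED_FIELDS[component] if field not in task]


task = {
    "text": " Rats and Their Alarming Bugs",
    "label": "ENTERTAINMENT",
    "answer": "accept",
}
missing = find_missing_fields(task, "ner")
for field in missing:
    print(f"{field}   field required")
```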

Debugging and logging

If Prodigy raises an error, or you come across unexpected results, it’s often helpful to run the debugging mode to keep track of what’s happening, and how your data flows through the application. Prodigy uses the logging module to provide logging information across the different components. The logging mode can be set as the environment variable PRODIGY_LOGGING. Both logging modes log the same events, but differ in the verbosity of the output.

| Logging mode | Description |
|---|---|
| basic | Only log timestamp and event description with most important data. |
| verbose | Also log additional information, like annotation data and function arguments, if available. |

PRODIGY_LOGGING=basic prodigy ner.manual my_dataset en_core_web_sm ./my_data.jsonl --label PERSON,ORG

$ PRODIGY_LOGGING=basic prodigy ner.teach test en_core_web_sm "rats" --api guardian

13:11:39 - DB: Connecting to database 'sqlite'

13:11:40 - RECIPE: Calling recipe 'ner.teach'

13:11:40 - RECIPE: Starting recipe ner.teach

13:11:40 - LOADER: Loading stream from API 'guardian'

13:11:40 - LOADER: Using API 'guardian' with query 'rats'

13:11:42 - MODEL: Added sentence boundary detector to model pipeline

13:11:43 - RECIPE: Initialised EntityRecognizer with model en_core_web_sm

13:11:43 - SORTER: Resort stream to prefer uncertain examples (bias 0.8)

13:11:43 - PREPROCESS: Splitting sentences

13:11:44 - MODEL: Predicting spans for batch (batch size 64)

13:11:44 - Model: Sorting batch by entity type (batch size 32)

13:11:44 - CONTROLLER: Initialising from recipe

13:11:44 - DB: Loading dataset 'test' (168 examples)

13:11:44 - DB: Creating dataset '2017-11-20_13-07-44'

✨ Starting the web server at http://localhost:8080 ...

Open the app in your browser and start annotating!

13:11:59 - GET: /project

13:11:59 - GET: /get_questions

13:11:59 - CONTROLLER: Iterating over stream

13:11:59 - Model: Sorting batch by entity type (batch size 32)

13:11:59 - CONTROLLER: Returning a batch of tasks from the queue

13:11:59 - RESPONSE: /get_questions (10 examples)

13:12:07 - GET: /get_questions

13:12:07 - CONTROLLER: Returning a batch of tasks from the queue

13:12:07 - RESPONSE: /get_questions (10 examples)

13:12:07 - POST: /give_answers (received 7)

13:12:07 - CONTROLLER: Receiving 7 answers

13:12:07 - MODEL: Updating with 7 examples

13:12:08 - MODEL: Updated model (loss 0.0172)

13:12:08 - PROGRESS: Estimating progress of 0.0909

13:12:08 - DB: Getting dataset 'test'

13:12:08 - DB: Getting dataset '2017-11-20_13-07-44'

13:12:08 - DB: Added 7 examples to 2 datasets

13:12:08 - CONTROLLER: Added 7 answers to dataset 'test' in database SQLite

13:12:08 - RESPONSE: /give_answers

13:12:11 - DB: Saving database

Saved 7 annotations to database SQLite

Dataset: test

Session ID: 2017-11-20_13-07-44

Custom logging

If you’re developing custom recipes, you can use Prodigy’s log helper function to add your own entries to the log. The log function takes the following arguments:

| Argument | Type | Description |
|---|---|---|
| message | str | The basic message to display, e.g. “RECIPE: Starting recipe ner.teach”. |
| details | - | Optional details to log only in verbose mode. |

recipe.py (pseudocode)

import prodigy

@prodigy.recipe("custom-recipe")
def my_custom_recipe(dataset, source):
    # Pass locals() as details so the arguments show up in verbose mode
    prodigy.log("RECIPE: Starting recipe custom-recipe", locals())
    # load_my_stream is a placeholder for your own loader function
    stream = load_my_stream(source)
    prodigy.log(f"RECIPE: Loaded stream with argument {source}")
    return {
        "dataset": dataset,
        "stream": stream
    }
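To show how the message and details arguments relate to the two logging modes, here is a rough stand-in for such a helper built on the standard logging module. It is a sketch for illustration, not Prodigy's actual log function: the logger name, the mode parameter and the output format are assumptions. The message is logged in both modes, and details are only added in verbose mode.

```python
import logging
import os

logging.basicConfig(
    level=logging.INFO, format="%(asctime)s - %(message)s", datefmt="%H:%M:%S"
)
logger = logging.getLogger("prodigy_sketch")  # hypothetical logger name


def log(message, details=None, mode=None):
    """Log message in both modes and add details only in verbose mode."""
    if mode is None:
        mode = os.environ.get("PRODIGY_LOGGING")
    if mode not in ("basic", "verbose"):
        return None  # logging is disabled
    line = message
    if mode == "verbose" and details is not None:
        line = f"{message}\n{details!r}"
    logger.info(line)
    return line


basic_line = log("RECIPE: Starting recipe", {"dataset": "test"}, mode="basic")
verbose_line = log("RECIPE: Starting recipe", {"dataset": "test"}, mode="verbose")
```

Returning the formatted line is only there to make the behavior easy to inspect; a real helper would not need to return anything.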

Customizing Prodigy with entry points

Entry points let you expose parts of a Python package you write to other Python packages. This lets one application easily customize the behavior of another, by exposing an entry point in its setup.py or setup.cfg. For a quick and fun intro to entry points in Python, check out this excellent blog post.

Prodigy can load custom functions from several different entry points, for example custom recipe functions. To see this in action, check out the sense2vec package, which provides several custom Prodigy recipes. The recipes are registered automatically if you install the package in the same environment as Prodigy. The following entry point groups are supported:

| Entry point group | Description |
|---|---|
| prodigy_recipes | Entry points for recipe functions. |
| prodigy_db | Entry points for custom Database classes. |
| prodigy_loaders | Entry points for custom loader functions. |
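As a sketch of what registering a recipe through the prodigy_recipes group could look like, the dict below has the shape that setuptools expects for the entry_points argument of setup(). The package, module and function names are hypothetical. The small parser only demonstrates the standard "name = module:attribute" entry point syntax.

```python
# Hypothetical package layout: my_package/recipes.py defines my_custom_recipe.
ENTRY_POINTS = {
    "prodigy_recipes": [
        "custom-recipe = my_package.recipes:my_custom_recipe",
    ],
}
# In setup.py this would be passed on as:
# setup(name="my_package", entry_points=ENTRY_POINTS)


def parse_entry_point(spec: str) -> tuple:
    """Split "name = module:attribute" into its three parts."""
    name, _, target = spec.partition("=")
    module, _, attr = target.strip().partition(":")
    return name.strip(), module, attr


parsed = parse_entry_point(ENTRY_POINTS["prodigy_recipes"][0])
print(parsed)
```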